XGBoost and LightGBM are packages that belong to the family of gradient boosting decision trees (GBDTs). AdaBoost (Adaptive Boosting) works by focusing each new learner on the samples that earlier learners misclassified.


Here is an example of using a linear model as the base learner in XGBoost.

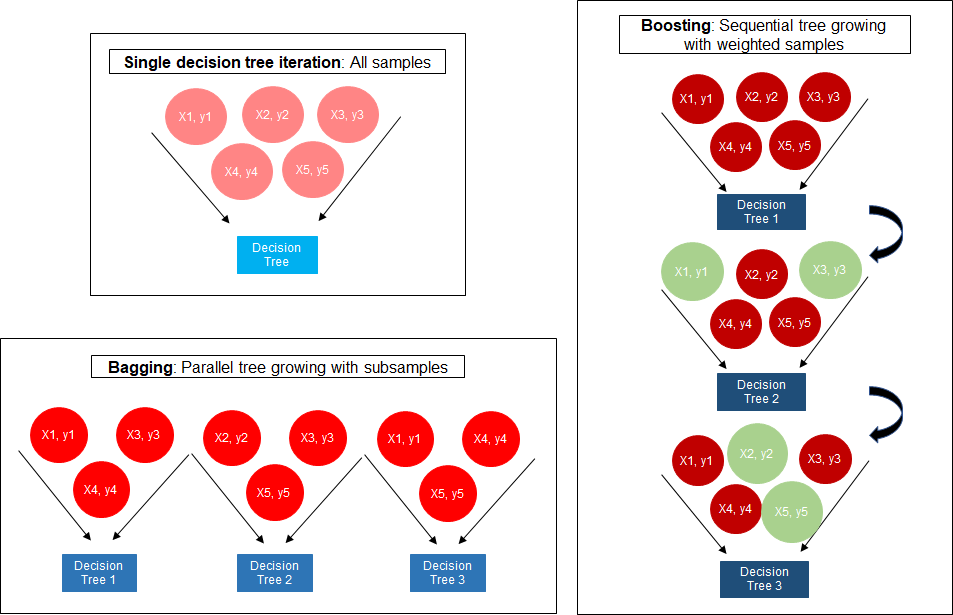
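
To see what a linear base learner does inside a boosting loop, here is a minimal sketch that boosts least-squares lines on squared error with one numeric feature. It is not XGBoost's actual linear booster (selected with `booster="gblinear"`, which has its own updater and regularization); `fit_line` and `boost_linear` are hypothetical helper names used only for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x (the linear base learner)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx else 0.0
    return mean_y - b * mean_x, b


def boost_linear(xs, ys, n_rounds=50, learning_rate=0.3):
    """Squared-error boosting with linear base learners; returns the combined (a, b)."""
    a_total = b_total = 0.0
    for _ in range(n_rounds):
        # Residuals of the current ensemble are the targets for the next learner.
        residuals = [y - (a_total + b_total * x) for x, y in zip(xs, ys)]
        a, b = fit_line(xs, residuals)
        # A sum of scaled lines is still a line, so just accumulate the coefficients.
        a_total += learning_rate * a
        b_total += learning_rate * b
    return a_total, b_total
```

Because a sum of lines is itself a line, boosting linear base learners converges toward an ordinary linear fit rather than something more expressive, which is why a linear booster behaves like a (regularized) linear model.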

In the Random Forests part, I already discussed the differences between bagging and boosting as tree ensemble methods. XGBoost can also be used to train a random forest, and it can even boost random forests by growing several parallel trees in each boosting round. The base learner can be a tree, a stump, or another model, even a linear model.

In this blog, we go over the fundamental differences between XGBoost and LightGBM to help guide our machine learning experiments. XGBoost, or eXtreme Gradient Boosting, is an efficient implementation of the gradient boosting machine (GBM) framework.

The idea behind boosting is to train predictors sequentially, where each subsequent model tries to fix the errors of its predecessor. Boosting builds a model from individual so-called weak learners in an iterative way. XGBoost's training is very fast and can be parallelized or distributed across clusters.
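
A minimal sketch of this sequential idea, assuming squared-error loss, a single numeric feature, and depth-1 trees (stumps) as the weak learners. Each round fits a stump to the residuals left by the ensemble so far. The names `fit_stump`, `boost`, and `predict` are hypothetical helpers, not part of XGBoost's API.

```python
def fit_stump(xs, residuals):
    """Best single-threshold split of a 1-D feature under squared error.

    Returns (threshold, left_value, right_value); each value is the mean residual on its side.
    """
    points = sorted(set(xs))
    best = None
    for lo, hi in zip(points, points[1:]):
        thr = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= thr]
        right = [r for x, r in zip(xs, residuals) if x > thr]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lv, rv)
    if best is None:  # every x is identical: fall back to a constant learner
        mean = sum(residuals) / len(residuals)
        return float("inf"), mean, mean
    return best[1:]


def predict_stump(stump, x):
    thr, left_value, right_value = stump
    return left_value if x <= thr else right_value


def boost(xs, ys, n_rounds=100, learning_rate=0.1):
    """Each round fits a stump to the current residuals, i.e. the predecessor's errors."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrink each correction by the learning rate before adding it.
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)
```

No single weak learner here is accurate on its own; the accuracy comes from accumulating many small, shrunken corrections.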

In this article, we compare XGBoost and LightGBM. This diagram is taken from XGBoost's documentation.

XGBoost uses advanced regularization (L1 and L2), which improves the model's ability to generalize. The training methods used by the two algorithms are different. We'll compare XGBoost, LightGBM, and CatBoost to the older GBM, measuring accuracy and speed on four fraud-related datasets.
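
To make the L1/L2 point concrete, here is a sketch of the closed-form weight of a single leaf under XGBoost-style regularization, assuming the per-example first- and second-order gradients g and h are already computed. The L2 term (lambda) sits in the denominator, as in XGBoost's documentation; applying L1 (alpha) by soft-thresholding the gradient sum is my understanding of how alpha enters and should be checked against the XGBoost source. The function names are hypothetical, and `reg_lambda`/`reg_alpha` only echo the parameter names of XGBoost's scikit-learn wrapper.

```python
def soft_threshold(g_sum, alpha):
    """L1 shrinkage (assumed form): move the gradient sum toward zero by alpha, clipping at zero."""
    if g_sum > alpha:
        return g_sum - alpha
    if g_sum < -alpha:
        return g_sum + alpha
    return 0.0


def leaf_weight(grads, hessians, reg_lambda=1.0, reg_alpha=0.0):
    """Regularized Newton weight of one leaf: -T_alpha(G) / (H + lambda)."""
    G = sum(grads)     # summed first-order gradients in the leaf
    H = sum(hessians)  # summed second-order gradients in the leaf
    return -soft_threshold(G, reg_alpha) / (H + reg_lambda)
```

With squared error (g = prediction - y, h = 1), a leaf holding four examples whose residual is 3 gets weight 12 / (4 + 1) = 2.4 with lambda = 1, while a leaf holding a single such example gets 3 / (1 + 1) = 1.5: the leaf backed by less evidence is pulled further toward zero.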

Extreme Gradient Boosting (XGBoost) is one of the most popular variants of gradient boosting. What's so special about it?

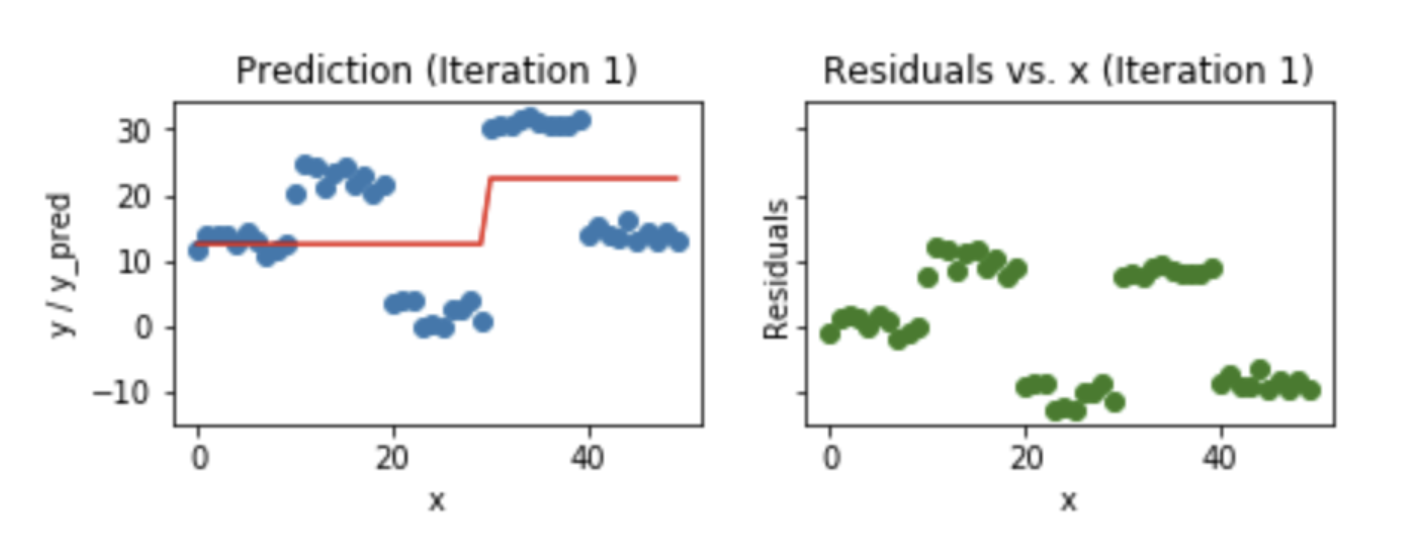

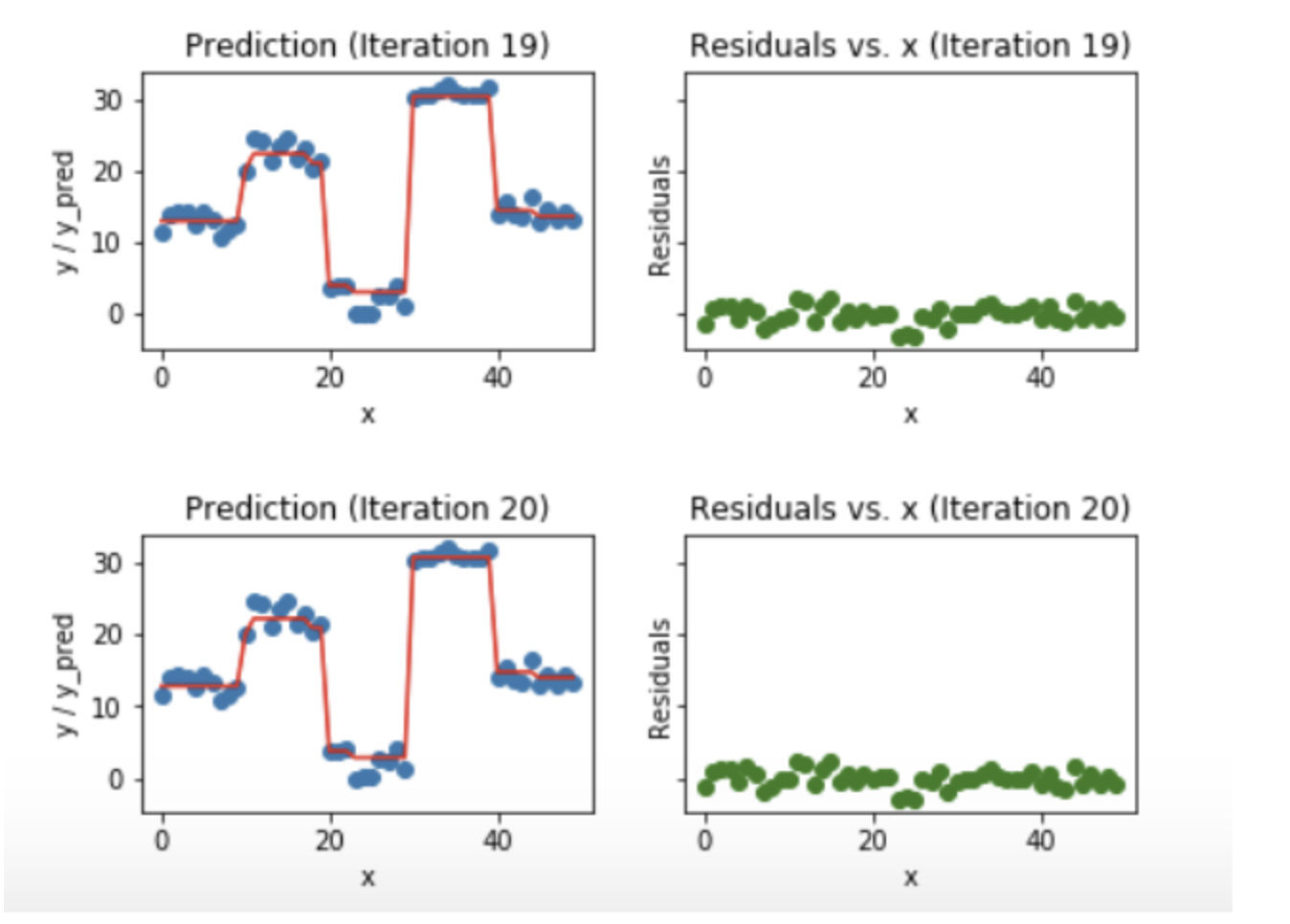

Gradient boosting is also a boosting algorithm, so it also tries to create a strong learner from an ensemble of weak learners. Let's look at how gradient boosting works. XGBoost is a more regularized form of gradient boosting.
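
The key step in gradient boosting is that each new weak learner is fitted to pseudo-residuals: the negative gradient of the loss with respect to the current prediction. The small sketch below (the function name and loss labels are hypothetical) shows that for squared error these are ordinary residuals, while other losses give different targets.

```python
import math


def negative_gradient(loss, y, f):
    """Pseudo-residual -dL/dF for one example, given the current raw prediction f."""
    if loss == "squared":   # L = 0.5 * (y - f) ** 2  ->  -dL/df = y - f
        return y - f
    if loss == "absolute":  # L = |y - f|  ->  -dL/df = sign(y - f)
        return 1.0 if y > f else (-1.0 if y < f else 0.0)
    if loss == "logistic":  # y in {0, 1}, p = sigmoid(f), L = log-loss  ->  -dL/df = y - p
        p = 1.0 / (1.0 + math.exp(-f))
        return y - p
    raise ValueError(f"unknown loss: {loss}")
```

Swapping the loss changes only these targets; the rest of the boosting loop (fit a learner to the targets, add a shrunken copy of it to the ensemble) stays the same.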

I think the difference between gradient boosting and XGBoost is that XGBoost focuses on computational efficiency by parallelizing the construction of each tree, as described in this blog. In the third phase, we applied five machine learning models to predict LOS.
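
Boosting rounds themselves are sequential, so the parallelism lives inside the construction of each tree: candidate splits for different features can be evaluated independently. A minimal sketch of that structure, assuming a variance-reduction (squared error) split criterion and hypothetical function names. In CPython the global interpreter lock means these threads will not actually speed up pure-Python arithmetic; the point is only the shape of the work.

```python
from concurrent.futures import ThreadPoolExecutor


def best_split_one_feature(column, targets):
    """Scan one feature's sorted values; return (sse, threshold) of its best split."""
    pairs = sorted(zip(column, targets))
    n = len(pairs)
    total = sum(t for _, t in pairs)
    total_sq = sum(t * t for _, t in pairs)
    best = (float("inf"), None)
    left_sum = left_sq = 0.0
    for i in range(n - 1):
        x, t = pairs[i]
        left_sum += t
        left_sq += t * t
        if x == pairs[i + 1][0]:
            continue  # cannot place a threshold between equal values
        n_left, n_right = i + 1, n - i - 1
        right_sum, right_sq = total - left_sum, total_sq - left_sq
        # Sum of squared errors around each side's mean.
        sse = (left_sq - left_sum ** 2 / n_left) + (right_sq - right_sum ** 2 / n_right)
        if sse < best[0]:
            best = (sse, (x + pairs[i + 1][0]) / 2)
    return best


def best_split(rows, targets, max_workers=4):
    """Evaluate all features concurrently, then keep the best (feature, threshold, sse)."""
    columns = list(zip(*rows))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda col: best_split_one_feature(col, targets), columns))
    j = min(range(len(results)), key=lambda k: results[k][0])
    sse, threshold = results[j]
    return j, threshold, sse
```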

XGBoost is an implementation of GBM in which you can configure which base learner is used. Generally, XGBoost is faster than plain gradient boosting, but gradient boosting has a wide range of applications.

XGBoost is specifically designed to train gradient boosted decision trees. We'll also present a concise comparison of all the newer algorithms, allowing you to quickly understand the main differences between them. There are several types of boosting algorithms, such as Adaptive Boosting (AdaBoost), Gradient Boosting, eXtreme Gradient Boosting (XGBoost), LightGBM, and CatBoost (Al Daoud, 2019). Boosting algorithms are simple to implement and aim to improve predictive power by training a sequence of weak models.

GBM is an algorithm, and you can find the details in Friedman's paper "Greedy Function Approximation: A Gradient Boosting Machine." A prior understanding of gradient boosted trees is useful. Gradient boosted trees are the special case where the simple model h is a decision tree.
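
For reference, a condensed form of the generic procedure, with L the loss, n training examples, and h_m the m-th simple model. The shrinkage factor \nu (learning rate) is my addition for consistency with common implementations; Friedman's original additionally chooses a step size by line search, which is omitted here.

```latex
\begin{aligned}
F_0(x) &= \arg\min_{\gamma} \sum_{i=1}^{n} L(y_i, \gamma) \\
r_{im} &= -\left[ \frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)} \right]_{F = F_{m-1}}, \qquad i = 1, \dots, n \\
h_m &= \text{simple model fitted to } \{(x_i, r_{im})\}_{i=1}^{n} \\
F_m(x) &= F_{m-1}(x) + \nu \, h_m(x), \qquad m = 1, \dots, M
\end{aligned}
```

Gradient boosted trees are the case where each h_m is a decision tree.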

Because canonical machine learning algorithms assume that the dataset has an equal number of samples in each class, binary classification on imbalanced datasets becomes a challenging task: minority-class samples are hard to discriminate efficiently. XGBoost computes second-order gradients, i.e., second partial derivatives of the loss function, which give more information about the curvature of the loss and how to reach its minimum.
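
As a sketch of what "second-order" means for binary log-loss with raw score f and p = sigmoid(f): the gradient is g = p - y and the Hessian is h = p(1 - p). A Newton step for a single leaf then divides the summed gradients by the summed Hessians, plus an optional L2 term. The function names are hypothetical.

```python
import math


def sigmoid(f):
    return 1.0 / (1.0 + math.exp(-f))


def logistic_grad_hess(y, f):
    """First and second derivatives of log-loss w.r.t. the raw score f (y in {0, 1})."""
    p = sigmoid(f)
    return p - y, p * (1.0 - p)


def newton_leaf_value(ys, fs, reg_lambda=0.0):
    """One Newton step for a leaf: -sum(g) / (sum(h) + lambda)."""
    G = H = 0.0
    for y, f in zip(ys, fs):
        g, h = logistic_grad_hess(y, f)
        G += g
        H += h
    return -G / (H + reg_lambda)
```

For three positives and one negative starting from f = 0, G = -1 and H = 1, so a single Newton step moves the leaf to 1.0, close to the exact log-odds ln 3 ≈ 1.10. A first-order method would instead need a separately tuned step size to get there.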

A grid search was used to find optimal model parameters and select the best models on the training set. You are correct: XGBoost (eXtreme Gradient Boosting) and scikit-learn's GradientBoosting estimators are fundamentally the same, as they are both gradient boosting implementations.
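
A minimal sketch of exhaustive grid search, assuming a caller-supplied `score_fn` (hypothetical) that returns a validation score where higher is better, for example a cross-validated score computed on the training set. The study's actual parameter grid is not given here, so any grid passed in is a placeholder; scikit-learn's `GridSearchCV` implements the same idea with cross-validation built in.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Try every combination in param_grid; return (best_score, best_params).

    param_grid maps parameter names to lists of candidate values.
    score_fn(params) must return a score where higher is better.
    """
    names = sorted(param_grid)
    best_score, best_params = float("-inf"), None
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params
```

The cost grows multiplicatively with each added parameter, which is why grids are usually kept small or replaced by random search.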

AdaBoost, Gradient Boosting, and XGBoost are three algorithms that do not get much recognition. Most of the magic is described in the name.

Difference between gradient boosting and AdaBoost: AdaBoost and gradient boosting are ensemble techniques used in machine learning to improve the performance of weak learners. However, there are very significant differences under the hood in a practical sense.
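
One of those differences is how the next learner is told what to fix: gradient boosting fits the next learner to gradients of the loss, whereas AdaBoost reweights the training samples. A minimal sketch of discrete AdaBoost with one-feature decision stumps, assuming labels in {-1, +1}; all names are hypothetical.

```python
import math


def fit_weighted_stump(xs, ys, weights):
    """Pick the threshold and polarity that minimize weighted error (labels -1/+1)."""
    points = sorted(set(xs))
    thresholds = [points[0] - 1.0] + [(a + b) / 2 for a, b in zip(points, points[1:])]
    best = None
    for thr in thresholds:
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (polarity if x > thr else -polarity) != y)
            if best is None or err < best[0]:
                best = (err, thr, polarity)
    return best


def stump_predict(thr, polarity, x):
    return polarity if x > thr else -polarity


def adaboost(xs, ys, n_rounds=10):
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        err, thr, pol = fit_weighted_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # more accurate stumps get a larger vote
        ensemble.append((alpha, thr, pol))
        # Up-weight misclassified samples, down-weight correct ones, then renormalize.
        weights = [w * math.exp(-alpha * y * stump_predict(thr, pol, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    score = sum(alpha * stump_predict(thr, pol, x) for alpha, thr, pol in ensemble)
    return 1 if score >= 0 else -1
```

On labels +1, +1, -1, -1, +1, +1 for x = 1..6, no single stump is correct everywhere, but three reweighted stumps combined by their alpha votes classify every point correctly.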

XGBoost is an optimized, distributed gradient boosting library that is flexible, scalable, and portable. But before we dive into the algorithms, let's quickly understand the fundamental concept of gradient boosting, which is part of both XGBoost and LightGBM. XGBoost includes clever penalization of trees, proportional shrinking of leaf nodes, Newton boosting, and an extra randomization parameter.
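
The tree penalization and leaf shrinkage both show up in the split gain given in XGBoost's documentation: lambda shrinks each child's score, and gamma is a fixed penalty for adding a leaf, so a split is only worth keeping when the gain stays positive. A sketch, with G and H the summed first- and second-order gradients in each child (the Newton boosting part); the function name is hypothetical.

```python
def split_gain(G_L, H_L, G_R, H_R, reg_lambda=1.0, gamma=0.0):
    """Gain of splitting a leaf into left/right children, minus the per-leaf penalty gamma."""
    def score(G, H):
        # Structure score of a leaf; lambda in the denominator shrinks it.
        return G * G / (H + reg_lambda)

    return 0.5 * (score(G_L, H_L) + score(G_R, H_R)
                  - score(G_L + G_R, H_L + H_R)) - gamma
```

In XGBoost the penalty is exposed as the `gamma` parameter (alias `min_split_loss`); raising it prunes splits whose gain is too small to pay for the extra leaf.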

The models considered are eXtreme Gradient Boosting (XGBoost), Gradient Boosting Decision Tree (GBDT), Random Forest (RF), linear SVM, and Deep Neural Network (DNN). This study demonstrates the efficacy of using XGBoost as a state-of-the-art machine learning (ML) model for forecasting. The algorithm is similar to Adaptive Boosting (AdaBoost) but differs from it in certain aspects.

With XGBoost, trees can have a varying number of terminal nodes, and leaf weights calculated from less evidence are shrunk more heavily.

Plain gradient boosting does not explicitly manage the bias-variance trade-off, whereas XGBoost also adds a regularization term to its objective. Boosting is a method of converting a set of weak learners into a strong learner. While regular gradient boosting fits each base model (e.g., a decision tree) to first-order gradients of the loss, XGBoost uses a second-order approximation of the loss.

XGBoost typically delivers higher performance than standard gradient boosting.
